This fall, Assistant Professor of Electrical and Computer Engineering Omiya Hassan taught a new course on artificial intelligence (AI) and machine learning (ML) hardware systems. Students worked all semester to develop devices that showcase the implementation and inference of optimized AI/ML models on edge devices such as microcontrollers, with a maximum power consumption of 50 mW.

“The outcome of this course was to equip students with the knowledge necessary to implement AI/machine-learning algorithms on resource-constrained hardware systems by focusing on optimization techniques to enhance energy efficiency,” said Hassan. “These projects helped students grasp the trade-offs between efficiency and accuracy. They also successfully deployed optimized AI/ML models on microcontrollers. As an instructor, I think it is an amazing success.”

Projects included gesture and voice control for remote sensing, an automated waste management system, and a robot that makes pancakes using a “Mixture of Experts (MoE)” machine learning model. The course culminated in live demonstrations of seven successful projects.

- Emotion Detection through Speech (Ethan Barnes, Matthew Roloff, Alex Anta, and Nicholas Bentley): This project aims to develop an embedded system that can distinguish happiness, sadness, and anger from a neutral tone based on the tone and volume of speech. Our project hopes to enhance applications that involve interaction between people and devices.

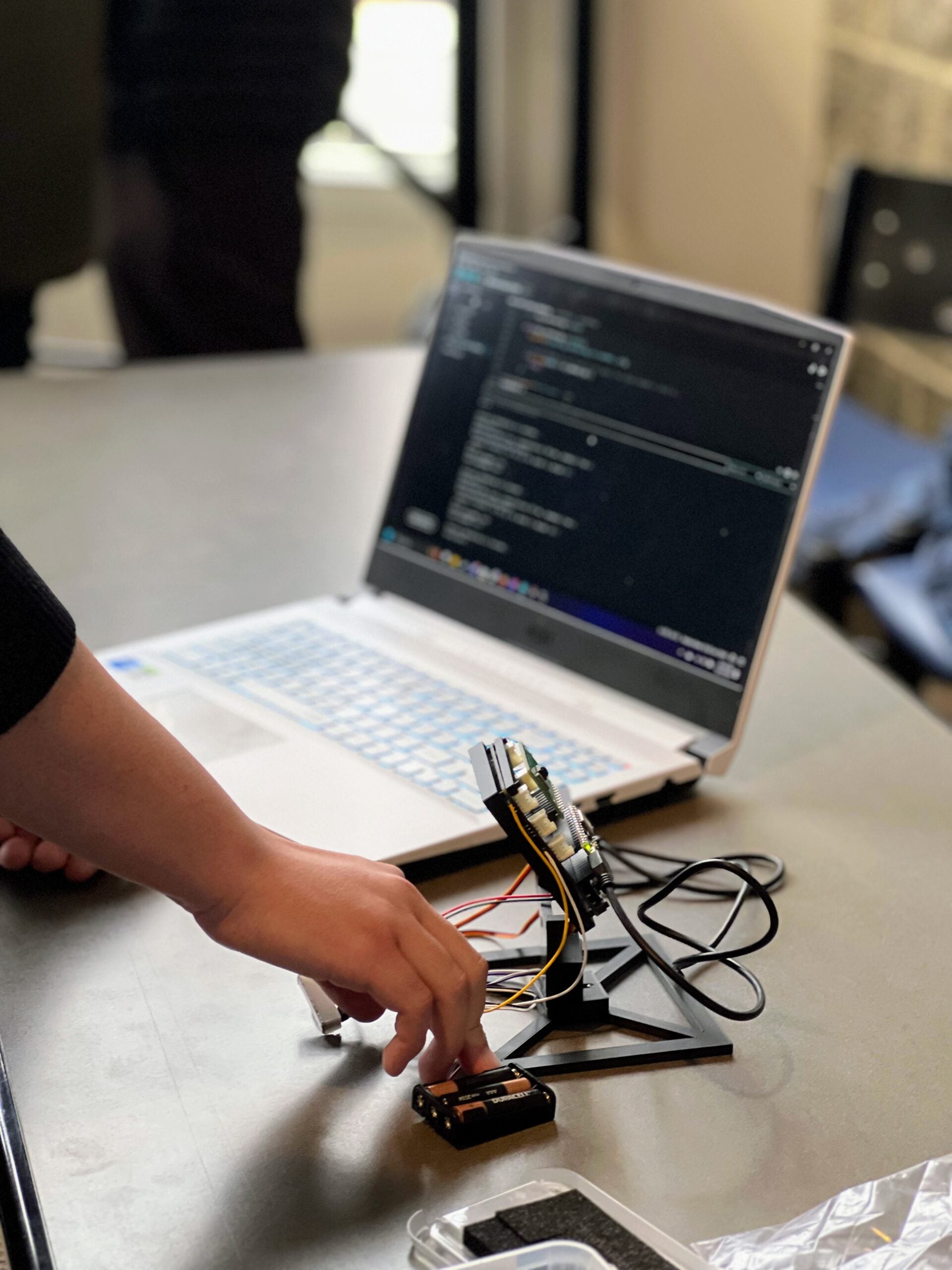

- Motion Tracking Device for Real-Time Face Tracking (Adam McCall, Gabe Perez, Diego Ortiz, and Vlad Maliutin): A motion-tracking device using TinyML for real-time face tracking on edge devices enables autonomous, low-power operation. This system eliminates reliance on cloud processing by embedding a lightweight machine-learning model into a microcontroller, making it ideal for portable and secure applications.

- Depth Estimation With Quantization: A Case Study (Nio Pedraza, Adam Torek, and Carson Keller): Depth estimation is useful for autonomous devices. Historically, this functionality has required dedicated auxiliary modules. Machine learning models can perform this task, but their parameter counts make them difficult to deploy on edge devices. This project explores quantizing depth estimation models so that they can potentially be deployed in embedded applications.

- Gesture & Voice TV Remote Control (Eric Kondratyuk and Daniel Uranga): Have you ever wished you could use gestures to control the volume of your devices? What about your voice? The goal was to use gesture controls to change the volume and to pause or play, and to use voice commands to turn the remote on or off. Each of these controls should achieve a minimum accuracy of 85%.

- ASL Alphabet Recognition on an Embedded System (Ian Varie, Colton Gordon, and Parker Parrish): Deep learning models often require substantial computational resources that are not feasible for small embedded systems. To address this challenge, we focused on optimizing a MobileNetV2 classifier for ASL alphabet recognition to run on the resource-constrained Arduino Nano 33 BLE.

- Pancake Robot (Oliver MacDonald and Chris Dagher): This project introduces a novel, AI-powered approach to small-scale pancake cooking by utilizing a Mixture of Experts model that processes multi-modal sensor data from both a webcam and a thermal camera. Unlike existing industrial solutions that use rigid, energy-inefficient conveyor systems, this solution provides a flexible and adaptable cooking method based on the pancake batter’s type and stage of cooking.

- Automated Waste Sorting with Edge AI (Ashraf Shihab and Md Mahmudul Kabir Peyal): The goal is to develop a smart waste-sorting system that uses Edge AI to automatically classify and separate recyclable and biodegradable materials, making waste management efficient and sustainable.
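
For readers curious about the efficiency-versus-accuracy trade-off mentioned above, the following is a minimal sketch of one common optimization, quantization, which stores model weights as 8-bit integers instead of floating-point numbers. It is an illustration only and not any team's actual code: the function names and the sample weights are made up for this example, and it assumes a simple symmetric scheme with a single scale factor for the whole list.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale (illustrative)."""
    max_abs = max(abs(w) for w in weights)
    # Avoid dividing by zero when every weight is 0.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float values from the stored integers."""
    return [v * scale for v in q]


# Hypothetical weights, chosen only to demonstrate the idea.
weights = [0.82, -1.27, 0.003, 0.5, -0.45]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# The rounding error is the accuracy given up in exchange for smaller storage.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print("int8 values:", q)
print("largest rounding error:", max_error)
```

Each weight now fits in one byte rather than four, at the cost of a rounding error of at most half the scale factor per weight. Real deployments typically rely on a framework's quantization tooling rather than hand-written code like this.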